The living room has an interesting topology for multimodal interaction. Years ago, you got up and turned the dial on the TV itself. The advent of the remote control let you do that from your seat. Even into the early-to-mid 2000s, the living room held just the remote and the TV – the landline, and later the cell phone, worked separately in their own ecosystem. The arrival of smartphones, tablets*, set-top boxes, smart TVs, and game consoles rapidly turned the living room into an IP oasis.

As with the original remote and TV, we hope these new interactions create value, ease pain points, and don’t cause new problems. A new TV app might give you access to content not available anywhere else, but we don’t want to burden the user with typing a long username and password on a virtual keyboard with a remote, on a big screen where everyone else in the room can see exactly what you’re typing.

User Controlling TV

The remote is well established for simple button input: power, volume up/down, channel up/down, directional UI navigation, and a number pad. This covers a lot of basic hardware control and UI navigation. Some newer TVs and devices take a minimal approach, e.g. two buttons plus an air mouse (gyroscope) or touchpad; on its own this can actually make things harder, so it is usually paired with a microphone for voice control.

More Complicated Input

For more complex input, such as a long text query or authentication, we need that microphone or a device more commonly used for text input (e.g. a smartphone). Casting over a local Wi-Fi network is a great example: you do all the work of pulling something up easily on your phone, then at the last step hand it off to the TV for playback.

Voice control has certainly matured over time. It has gone from listening for very specific commands in a specific context (an automated phone system: “tell me which number you choose”), to more complicated but known query formats (“what year was Bill Murray born”), toward an ideal world where an AI can understand open-ended queries. The gap between where we are and that ideal really does hold people back from using voice products: the product can handle some powerful queries, but users fall into decision paralysis when asked to use it because they don’t know which queries it supports. “What can I ask for? How do I need to ask for it?” These shouldn’t be concerns, and a longer conversation or series of queries should be able to build on each other.

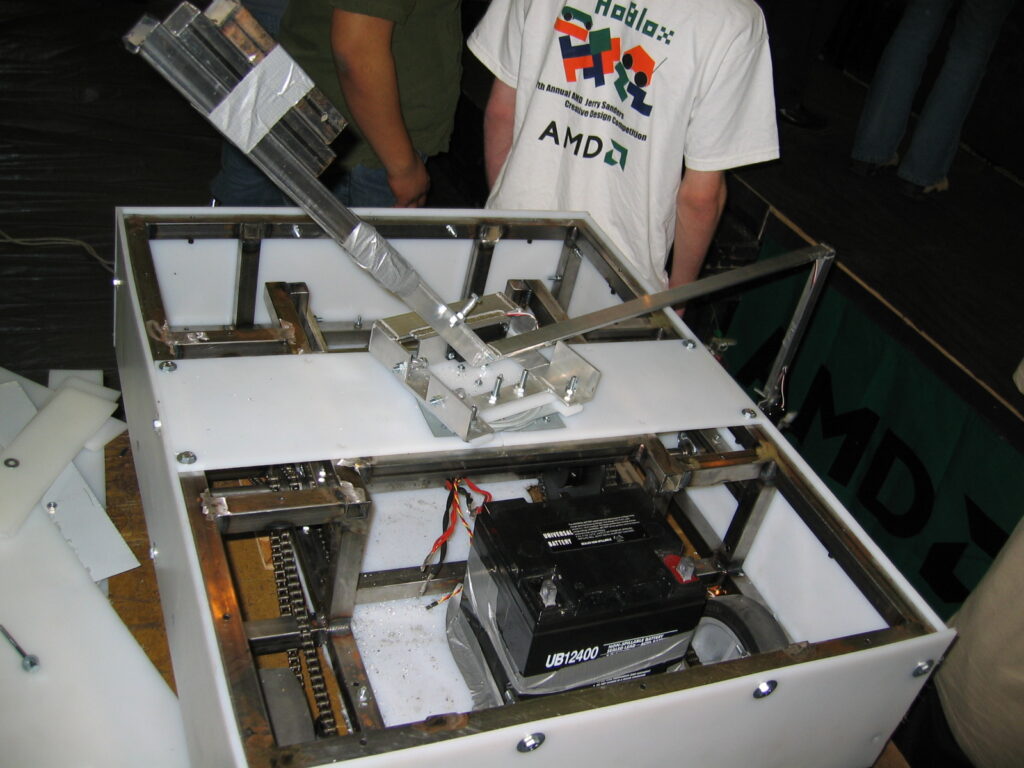

TV Sending Data Back to User

This pathway hasn’t been tapped much. Users tag or bookmark content, which is then synced to a playlist on their account, but there is no immediacy to it. A more urgent kind of data exchange is familiar to many: the TV shows a QR code, or a website URL plus a short code, and you enter that code on your phone or desktop to sign in. It is one of the most seamless ways to handle authentication on a TV.
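The short-code sign-in resembles the OAuth 2.0 Device Authorization Grant (RFC 8628). As a minimal sketch, assuming a single in-memory backend and hypothetical names (`PairingService`, `start`, `approve`, `poll`), the TV requests a code pair and shows only the short code, the user approves from their phone, and the TV polls until the approval lands:

```python
import secrets
import time
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple

# Consonant-only alphabet, in the spirit of RFC 8628's suggestion, so the code
# is easy to read off a TV across the room and can't spell words.
USER_CODE_ALPHABET = "BCDFGHJKLMNPQRSTVWXZ"


@dataclass
class PendingPairing:
    user_code: str
    expires_at: float
    account_id: Optional[str] = None  # set once the phone approves the pairing


class PairingService:
    """Hypothetical in-memory stand-in for a backend's TV sign-in endpoints."""

    def __init__(self, ttl_seconds: float = 600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._by_device: Dict[str, PendingPairing] = {}
        self._by_user_code: Dict[str, str] = {}

    def start(self) -> Tuple[str, str]:
        """TV side: returns (device_code, user_code); only user_code goes on screen."""
        device_code = secrets.token_urlsafe(32)  # long secret the TV never displays
        # Two groups of four characters joined by a dash, e.g. "XXXX-XXXX".
        user_code = "-".join(
            "".join(secrets.choice(USER_CODE_ALPHABET) for _ in range(4))
            for _ in range(2)
        )
        self._by_device[device_code] = PendingPairing(user_code, self._clock() + self._ttl)
        self._by_user_code[user_code] = device_code
        return device_code, user_code

    def approve(self, typed_code: str, account_id: str) -> bool:
        """Phone side: the signed-in user types the short code shown on the TV."""
        device_code = self._by_user_code.get(typed_code.strip().upper())
        if device_code is None:
            return False
        pairing = self._by_device[device_code]
        if self._clock() > pairing.expires_at:
            return False
        pairing.account_id = account_id
        del self._by_user_code[pairing.user_code]  # each short code works only once
        return True

    def poll(self, device_code: str) -> Tuple[str, Optional[str]]:
        """TV side: called every few seconds until the phone has approved."""
        pairing = self._by_device.get(device_code)
        if pairing is None:
            return ("unknown", None)
        if pairing.account_id is not None:
            return ("approved", pairing.account_id)
        if self._clock() > pairing.expires_at:
            return ("expired", None)
        return ("pending", None)
```

Keeping the long `device_code` on the TV and showing only the short code means the awkward part, signing in, happens on the phone, where typing is easy and private. A production flow would need more than this sketch, such as rate limiting code attempts and a server-specified polling interval, both of which RFC 8628 discusses.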

ACR (automatic content recognition; see Shazam) is one way your digital device can make sense of the content playing on the TV. The user’s app recognizes the audio/video, or an invisible segment of it. It is surprisingly accurate in certain scenarios.
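To make ACR a little more concrete, here is a toy sketch of fingerprint matching, loosely following the landmark-hashing idea Shazam has publicly described. It assumes each audio frame has already been reduced to a single dominant frequency bin (real systems extract several spectrogram peaks per frame and are far more robust to noise); `ContentIndex`, `add`, and `identify` are hypothetical names:

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional, Sequence, Tuple

Hash = Tuple[int, int, int]  # (anchor peak bin, target peak bin, frame gap)


def landmark_hashes(peaks: Sequence[int], fan_out: int = 3) -> List[Tuple[Hash, int]]:
    """Pair each frame's peak with the next few frames' peaks; returns (hash, anchor_frame)."""
    out = []
    for t, f1 in enumerate(peaks):
        for dt in range(1, fan_out + 1):
            if t + dt < len(peaks):
                out.append(((f1, peaks[t + dt], dt), t))
    return out


class ContentIndex:
    """Hypothetical reference database of known shows/ads, keyed by landmark hash."""

    def __init__(self) -> None:
        self._index: Dict[Hash, List[Tuple[str, int]]] = defaultdict(list)

    def add(self, content_id: str, peaks: Sequence[int]) -> None:
        for h, t in landmark_hashes(peaks):
            self._index[h].append((content_id, t))

    def identify(self, clip_peaks: Sequence[int],
                 min_votes: int = 3) -> Optional[Tuple[str, int]]:
        """Return (content_id, frame offset into it) for the best match, or None."""
        votes: Counter = Counter()
        for h, t_clip in landmark_hashes(clip_peaks):
            for content_id, t_ref in self._index.get(h, []):
                # A true match lines up at one consistent offset; chance hits scatter.
                votes[(content_id, t_ref - t_clip)] += 1
        if not votes:
            return None
        (content_id, offset), count = votes.most_common(1)[0]
        return (content_id, offset) if count >= min_votes else None
```

The offset vote is the key design choice: a genuine match produces many hashes that agree on one time offset, while coincidental hash collisions spread thinly across many offsets, so a short clip captured mid-show can still be identified.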

Then there is the commerce portion I’ve worked on quite a bit. You’re browsing products on the TV and want to buy one on your phone. The system could text you a link, push a link or notification through a shared app (e.g. YouTube), or pass the link via a QR code.

IRL Interactions

Of course, these are all digital interactions. You hear some (upper-middle-class) people lament the loss of non-digital social interaction, with the family physically sharing the living room but each member lost in their own world: handheld video games, texting friends, Facebook stalking, checking fantasy football scores in an app, homework on a laptop. IRL interaction is important, but it is not the focus here.

* I’m lumping laptops in here too. Unlike these other devices, though, they can fall outside an affordable price range for lower-income homes.